The earliest days of 2026 are an opportune time to take stock of the past year’s AI results, evaluate what worked and what didn’t, and map out plans that position a business for enterprise AI success in 2026.

To that end, I recently analyzed recommendations from PwC on how to drive ROI from AI initiatives in 2026; the firm placed significant focus on leadership and workforce factors in driving success. It’s worth noting those recommendations were based on PwC’s view that AI results have fallen short to date. In addition, PwC calls for human and technical focus on AI agent orchestration for governance, controls, and data protection.

Building on that last topic of orchestration, I’d like to break down a separate set of recommendations published by Deloitte, which emphasizes the need to focus on, and optimize, AI agent operations and observability. These two areas, of course, relate closely to orchestration and governance, but Deloitte goes deep on operations and observability specifically.

Why Agent Ops, Observability Are Needed

Deloitte highlights the rise of multi-agent AI systems and their role in shifting humans away from executing tasks with agent support and toward supervising work that agents execute.

From this analyst’s perspective, the multi-agent model is indeed exploding, and its growth is being accelerated by the rise of Model Context Protocol (MCP), which allows diverse agents and back-end systems to connect and communicate efficiently using a common specification.
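To make “common specification” concrete: MCP messages follow JSON-RPC 2.0, and a client invokes a tool exposed by an MCP server with a `tools/call` request whose params carry the tool `name` and its `arguments`. The minimal sketch below hand-builds such a message. The tool name `lookup_ticket` and its `ticket_id` argument are hypothetical, and a real deployment would use an MCP SDK and transport rather than assembling JSON by hand.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",        # MCP messages follow JSON-RPC 2.0
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a server-exposed tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# An agent asking a (hypothetical) ticketing server to look up an incident.
print(build_tool_call(1, "lookup_ticket", {"ticket_id": "INC-1042"}))
```

Because every server accepts the same message shape, an agent can call tools on many different back-end systems without custom integration code for each one.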

While multi-agent systems introduce new options for productivity, speed, and scalability, they also create a need for strong oversight, operational focus, and observability into how agents are operating.

This process of developing and managing agent operations, Deloitte notes, requires in-depth decomposition of business processes to clearly identify roles and activities that make up a workflow, as well as the KPIs and observability functions needed to optimize the performance of agents.

To accurately decompose a process for agent performance, Deloitte says that customers need:

- Data quality and context

- Continuous improvement

- Humans on the loop for imagination, adaptive thinking, and emotional intelligence

- Change management practices

- Ethical focus

Once a process has been decomposed, Deloitte recommends five categories of KPIs and notes that, because these KPIs are interdependent, all of them must be in place to evaluate agent performance effectively. The five categories, with an example of each, are:

- Cost – how much compute power is consumed per task

- Speed – amount of time required to generate a response

- Productivity – average task handling time

- Quality – the agent’s track record in selecting the optimal tool for a given task

- Trust – feedback scores measuring how users perceive the agent’s understanding of their requirements
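As a rough illustration of how the five example KPIs above might be computed from per-task agent records, here is a minimal sketch. The `TaskRecord` fields, their units, and the 1-5 rating scale are all hypothetical assumptions, not Deloitte’s definitions; a real system would pull these values from observability telemetry.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    """One completed agent task. All field names here are hypothetical."""
    compute_units: float      # compute consumed by the task (assumed unit, e.g. tokens)
    response_seconds: float   # time taken to generate the agent's response
    handling_seconds: float   # end-to-end time to handle the whole task
    chose_optimal_tool: bool  # whether a reviewer judged the tool choice optimal
    user_rating: int          # user feedback score, assumed to be on a 1-5 scale


def agent_kpis(records: list[TaskRecord]) -> dict[str, float]:
    """Compute one example KPI per category over a batch of task records."""
    if not records:
        raise ValueError("need at least one task record")
    return {
        "cost_per_task": mean(r.compute_units for r in records),         # Cost
        "avg_response_seconds": mean(r.response_seconds for r in records),  # Speed
        "avg_handling_seconds": mean(r.handling_seconds for r in records),  # Productivity
        "optimal_tool_rate": sum(r.chose_optimal_tool for r in records) / len(records),  # Quality
        "avg_trust_score": mean(r.user_rating for r in records),         # Trust
    }
```

Reporting all five side by side reflects the interdependence Deloitte describes: a lower cost per task means little if the optimal-tool rate or trust score falls along with it.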

To effectively measure this range of interconnected KPIs, a company needs systems to collect comprehensive data, manage that data securely, understand why and how agents act in real time, then monitor agent activities over time. The way to deliver these functions: an agent operations model, powered by observability technology.

The clearest way to understand the role and value of agent operations is to consider it a performance and risk management function for human and AI teams. Leaning on agent observability, agent operations can deliver alerts and insights into the operational performance and business impact of multi-agent AI estates.

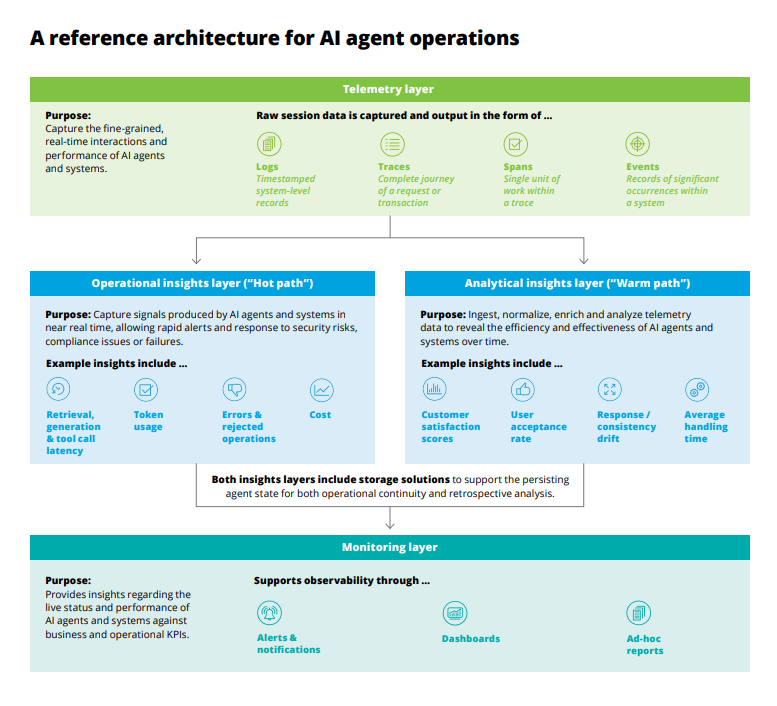

In Deloitte’s view, agent operations isn’t a set of tools but rather an ecosystem of intertwined capabilities built on a reference architecture.

It’s also useful to note that this architecture (see diagram) captures signals, or telemetry, in two ways: immediately, for observability of real-time risks (the “hot path”), and over time, for analysis of patterns (the “warm path”).
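The hot-path/warm-path split can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Deloitte’s reference architecture: the `TelemetryEvent` fields, the 5,000 ms latency threshold, and the in-memory list standing in for an analytics store are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class TelemetryEvent:
    """A single agent signal. Field names are hypothetical."""
    agent: str
    latency_ms: float
    policy_violation: bool


@dataclass
class TelemetryRouter:
    latency_alert_ms: float = 5000.0  # assumed hot-path alert threshold
    alerts: list[str] = field(default_factory=list)  # hot-path output
    warm_store: list[TelemetryEvent] = field(default_factory=list)  # stand-in for an analytics store

    def ingest(self, event: TelemetryEvent) -> None:
        # Hot path: evaluate each event immediately for real-time risk.
        if event.policy_violation:
            self.alerts.append(f"{event.agent}: policy violation")
        elif event.latency_ms > self.latency_alert_ms:
            self.alerts.append(f"{event.agent}: slow response")
        # Warm path: retain every event for later pattern analysis.
        self.warm_store.append(event)

    def warm_summary(self) -> dict[str, float]:
        """Warm path: average latency per agent across all stored events."""
        latencies: dict[str, list[float]] = defaultdict(list)
        for e in self.warm_store:
            latencies[e.agent].append(e.latency_ms)
        return {agent: sum(v) / len(v) for agent, v in latencies.items()}
```

The key design point is that every event takes both paths: the hot path decides instantly whether to raise an alert, while the warm path keeps the full history so that trends, such as an agent gradually slowing down, become visible only in aggregate.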

Examples of real-world agents that support an AI agent operations model include an interface agent that interacts directly with users, an incident classification agent that identifies the nature of an issue, an incident analysis agent that isolates the likely causes of an issue, and an incident resolution agent. IT support is a clear-cut use case for these agents.
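A minimal sketch of how those four agents might hand off an IT support incident is shown below. Simple keyword rules stand in for model-backed agents, and the `Incident` fields, categories, causes, and fixes are hypothetical. Unrecognized issues fall through to a human escalation, and each handoff between agents is a natural point for agent operations to emit telemetry.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """Shared state passed between agents. Field names are hypothetical."""
    text: str
    category: str = "unknown"
    likely_cause: str = "unknown"
    resolution: str = "escalate to human"  # default when no fix is found


def interface_agent(user_message: str) -> Incident:
    """Receives the user's report and opens an incident."""
    return Incident(text=user_message.strip())


def classification_agent(incident: Incident) -> Incident:
    """Identifies the nature of the issue (keyword rules stand in for a model)."""
    text = incident.text.lower()
    if "password" in text or "login" in text:
        incident.category = "access"
    elif "vpn" in text or "network" in text:
        incident.category = "network"
    return incident


def analysis_agent(incident: Incident) -> Incident:
    """Isolates a likely cause for the classified issue."""
    causes = {"access": "expired credentials", "network": "VPN client misconfiguration"}
    incident.likely_cause = causes.get(incident.category, "unknown")
    return incident


def resolution_agent(incident: Incident) -> Incident:
    """Proposes a fix, or keeps the default human escalation."""
    fixes = {
        "expired credentials": "send password reset link",
        "VPN client misconfiguration": "push standard VPN profile",
    }
    incident.resolution = fixes.get(incident.likely_cause, incident.resolution)
    return incident


def handle(user_message: str) -> Incident:
    """Run the incident through classification, analysis, and resolution in order."""
    incident = interface_agent(user_message)
    for agent in (classification_agent, analysis_agent, resolution_agent):
        incident = agent(incident)
    return incident
```

For example, `handle("I can't login, my password stopped working")` is classified as an access issue and resolved with a password reset link, while a report the rules don't recognize is left for a human to handle.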

Concluding Thoughts

As the links below indicate, the vendor community is moving quickly to deliver operational management, governance, and observability of AI agents. Yet it’s helpful to step back and evaluate this technology, and agent oversight more broadly, through the lens of an overall strategy: address the business challenge first, then evaluate tools and how well they support that strategy. Deloitte’s analysis is one good reference point as companies begin developing an overall framework.

Related Insights:

- With Agent 365 and Security Tools, Microsoft Equips Customers to Govern AI Agent Estates

- Microsoft Simplifies Agent/Development Orchestration

- With AI Infusion, Microsoft Positions Sentinel as Unifying Security Platform

- PwC Updates AI Agent Orchestrator

- Cisco Software Scans MCP Assets to Secure AI Supply Chain
